Tokenizer

Tokenizer is a Software as a Service (SaaS) tool that helps you replace your existing sensitive data with tokens. The tokens can then be safely distributed without the risk of the original data being leaked. Tokenizer is cloud-based and offered as a multi-tenant service. If you or your organization require a private instance, please contact us.

Pricing

Our pricing structure is simple and straightforward: you pay for what you use. There is no contract and no big up-front investment tying up your capital. Typical tokenization, encryption, or data masking solutions can cost tens if not hundreds of thousands of dollars a year. Our tokenization solution, Tokenizer, is a cloud-based software as a service that is ready the minute you finish signing up. We have a free entry-level option for those who want to try before they buy!

| | Basic | Pro | Ultra | Mega |
|---|---|---|---|---|
| Pricing | Free! | $9.99/month | $99.99/month | $999.99/month |
| Requests | 500/month | 10,000/month | 100,000/month | 1,000,000/month |
| Additional Requests | Hard Limit | $0.002/request | $0.0015/request | $0.001/request |
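To make the table concrete, here is a small Python sketch that estimates a monthly bill. It assumes overage is charged per request beyond the plan's included quota and that Basic's "Hard Limit" means extra requests are rejected rather than billed; taxes and any RapidAPI-side fees are ignored, and `PLANS` and `monthly_cost` are hypothetical names for illustration. For example, 15,000 requests on Pro would come to $9.99 + 5,000 × $0.002 = $19.99.

```python
from decimal import Decimal

# Values copied from the pricing table above:
# plan -> (monthly fee, included requests, price per extra request; None = hard limit)
PLANS = {
    "Basic": (Decimal("0.00"), 500, None),
    "Pro": (Decimal("9.99"), 10_000, Decimal("0.002")),
    "Ultra": (Decimal("99.99"), 100_000, Decimal("0.0015")),
    "Mega": (Decimal("999.99"), 1_000_000, Decimal("0.001")),
}


def monthly_cost(plan: str, requests: int) -> Decimal:
    """Estimate one month's bill, assuming per-request overage beyond the quota."""
    fee, included, overage = PLANS[plan]
    extra = max(0, requests - included)
    if extra == 0:
        return fee
    if overage is None:
        # Assumption: a hard limit means extra requests are rejected, not billed.
        raise ValueError(f"{plan} is capped at {included:,} requests/month")
    # Decimal avoids binary floating-point error; round the total to cents.
    return (fee + extra * overage).quantize(Decimal("0.01"))


if __name__ == "__main__":
    for plan, requests in [("Pro", 15_000), ("Ultra", 120_000), ("Mega", 1_200_000)]:
        print(plan, requests, monthly_cost(plan, requests))
```

Using `Decimal` rather than `float` keeps sub-cent per-request prices such as $0.0015 exact when multiplied by large request counts.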

Sign Up!

We have partnered with RapidAPI, the world's largest API hub, to provide enterprise-grade API gateway services to our customers. All of our API services can be purchased and managed through RapidAPI Hub. Simply click the RapidAPI image below to go to our Tokenizer API, register, and start tokenizing!

How does Tokenizer work?

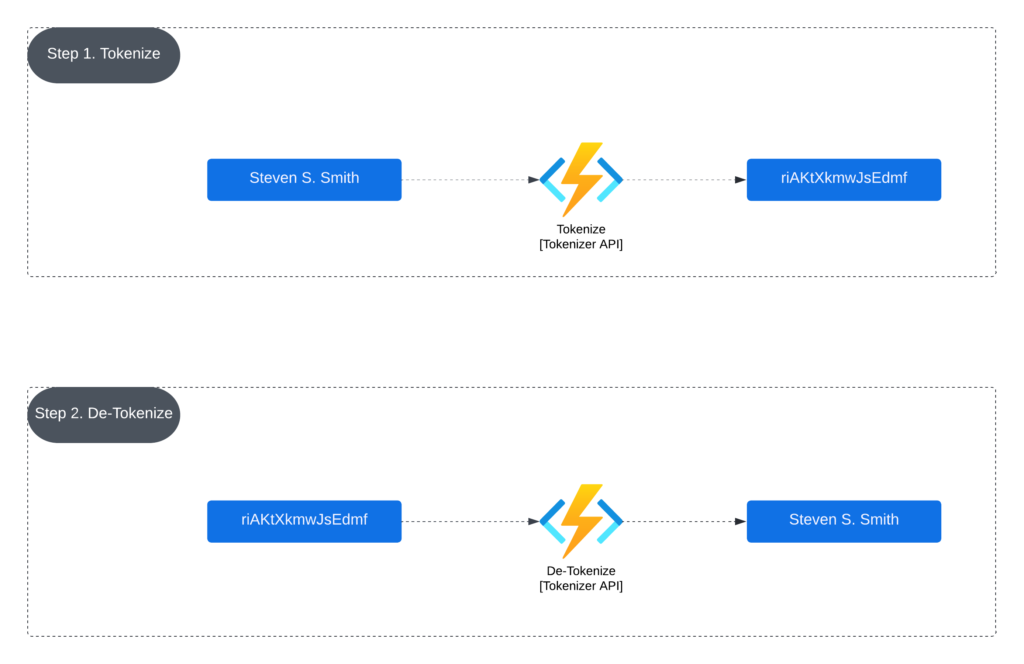

We have worked hard to make the process as simple as possible. Call our API with your data, and we will hand you back a token that you can redeem for your original data.

We store your data using AES-256 encryption, with either our vendor-provided key or your own key if you prefer to provide one. When you are ready, hand us back your token (plus your key, if you provided your own), and we will hand you back your original data.
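The tokenize/redeem flow described above can be sketched as a small in-memory token vault in Python. This is not Tokenizer's implementation or API: the `TokenVault` class and its methods are hypothetical, the page only says "AES 256", so the choice of GCM mode is ours, and the AES primitives come from the third-party `cryptography` package (`pip install cryptography`). Tokens are random strings with no relationship to the data, and only ciphertext is stored.

```python
from __future__ import annotations

import secrets

from cryptography.hazmat.primitives.ciphers.aead import AESGCM  # pip install cryptography


class TokenVault:
    """Hypothetical in-memory token vault; a sketch, not Tokenizer's service or API."""

    def __init__(self) -> None:
        # Stand-in for the vendor-provided key: 256 bits, so AESGCM runs AES-256.
        self._vendor_key = AESGCM.generate_key(bit_length=256)
        # token -> (nonce, ciphertext); the plaintext itself is never stored.
        self._store: dict[str, tuple[bytes, bytes]] = {}

    def tokenize(self, data: str, key: bytes | None = None) -> str:
        """Encrypt `data` with the caller's key (or the vendor key) and return a token."""
        aes = AESGCM(key if key is not None else self._vendor_key)
        token = secrets.token_urlsafe(16)  # random; reveals nothing about `data`
        nonce = secrets.token_bytes(12)    # fresh 96-bit nonce for every encryption
        # The token is passed as associated data, tying this ciphertext to this token.
        ciphertext = aes.encrypt(nonce, data.encode("utf-8"), token.encode("ascii"))
        self._store[token] = (nonce, ciphertext)
        return token

    def detokenize(self, token: str, key: bytes | None = None) -> str:
        """Return the original data; the same key used to tokenize must be supplied."""
        nonce, ciphertext = self._store[token]  # KeyError if the token is unknown
        aes = AESGCM(key if key is not None else self._vendor_key)
        # Raises cryptography.exceptions.InvalidTag if the key or token does not match.
        return aes.decrypt(nonce, ciphertext, token.encode("ascii")).decode("utf-8")


if __name__ == "__main__":
    vault = TokenVault()
    token = vault.tokenize("4111 1111 1111 1111")
    print(token)                    # a random 22-character URL-safe string
    print(vault.detokenize(token))  # 4111 1111 1111 1111

    # Bring-your-own-key: the same key must be handed back to de-tokenize.
    my_key = AESGCM.generate_key(bit_length=256)
    ssn_token = vault.tokenize("123-45-6789", key=my_key)
    print(vault.detokenize(ssn_token, key=my_key))  # 123-45-6789
```

Passing the token as associated data means a ciphertext moved under a different token fails to decrypt, and supplying the wrong key raises an error instead of returning garbage.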

Securing your data does not have to be expensive or complex!

What is Tokenization?

Tokenization is the process of replacing actual sensitive data elements with non-sensitive data elements that have no exploitable value for data security purposes. Security-sensitive applications use tokenization to replace sensitive data, such as personally identifiable information (PII) or protected health information (PHI), with tokens to reduce security risks.

De-tokenization returns the original data element for a provided token. Applications may require access to the original data, or an element of the original data, for decisions, analysis, or personalized messaging. To minimize the need to de-tokenize data and to reduce security exposure, tokens can retain attributes of the original data to enable processing and analysis using token values instead of the original data.
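As an illustration of a token that retains attributes of the original data, the Python sketch below produces a surrogate for a card or ID number that keeps its length, its separators, and its last four digits, while the remaining digits are random. The function name `format_preserving_token` and the keep-the-last-four rule are our own assumptions, not a description of Tokenizer's token format. A random surrogate like this cannot be reversed on its own: a vault must record the mapping for de-tokenization, and a production system would also check each new token against existing ones for collisions.

```python
import secrets

DIGITS = "0123456789"


def format_preserving_token(value: str, keep_last: int = 4) -> str:
    """Randomize all but the last `keep_last` digits, keeping every non-digit as-is."""
    total_digits = sum(ch in DIGITS for ch in value)
    out = []
    seen = 0
    for ch in value:
        if ch not in DIGITS:
            out.append(ch)  # spaces, dashes, etc. keep the original layout
            continue
        seen += 1
        if seen > total_digits - keep_last:
            out.append(ch)  # trailing digits survive, e.g. for "card ending in 1234"
        else:
            out.append(secrets.choice(DIGITS))  # cryptographically random digit
    return "".join(out)


if __name__ == "__main__":
    print(format_preserving_token("4111 1111 1111 1234"))  # keeps the spaces and trailing "1234"
    print(format_preserving_token("123-45-6789"))           # keeps the dashes and trailing "6789"
```

Because the output still looks like a card or ID number, downstream systems that validate length or display "ending in" digits can keep working on the token instead of the real value.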

Standard Use Cases:

- Securing data in systems where no field-level encryption or tokenization exists.

- Securing data that passes through partner services without having to worry about leaking it.

- Using production data securely in development, test, and other lower environments.

More Tokenization Information:


Tokenization 101

As data breaches become more frequent and sophisticated, data security has become a top concern for businesses of all sizes. One of the most effective ways to protect sensitive data is through tokenization, a process that replaces sensitive information with a unique identifier called a token. In this article, I will explore the benefits of…